Vertical vs Horizontal Scaling in System Design

Learn the key differences between vertical and horizontal scaling in system design and how load balancers enable effective horizontal scaling.

In modern web applications, handling increasing traffic is a critical challenge. There are two primary ways to scale an application: vertical scaling and horizontal scaling.

In this article, we’ll explore both approaches, highlight their pros and cons, and discuss why horizontal scaling is generally preferred for high-traffic applications.

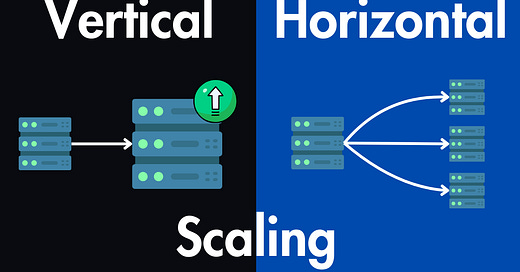

Vertical vs. Horizontal Scaling

Vertical Scaling (“Scale Up”)

Vertical scaling means adding more resources, such as CPU, RAM, or storage, to an existing server. This approach is straightforward and works well for applications with low to moderate traffic. However, it has some major limitations:

Resource Limits: Every machine has a ceiling on how much CPU, RAM, and storage it can hold. Once you hit that ceiling, the only way to scale further is to move to a more powerful machine, and eventually no bigger machine is available.

Single Point of Failure: If the server goes down, the entire application becomes unavailable.

Higher Costs: Powerful hardware upgrades can be expensive, making vertical scaling less cost-effective over time.

Vertical scaling works up to a point, but these limits make it hard to rely on as traffic keeps growing. Let’s explore why horizontal scaling is typically the better choice.

Horizontal Scaling (“Scale Out”)

Horizontal scaling, on the other hand, means adding more servers and spreading the load across them. This method is commonly used by large-scale applications because it provides:

Higher Fault Tolerance: If one server fails, others continue handling requests, ensuring availability.

Better Scalability: You can add or remove servers as needed, making it a flexible solution for fluctuating traffic.

Improved Performance: Each server handles a smaller share of the requests, which reduces bottlenecks and keeps response times lower under heavy load.
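To put a rough number on the fault-tolerance benefit, here is a minimal sketch. It assumes that servers fail independently of each other and that any single remaining server could carry the whole load, which is optimistic for real systems. The function name and the 99% figure are hypothetical and chosen only for illustration.

```python
def availability_of_pool(server_availability, server_count):
    """Probability that at least one server in the pool is up.

    Assumes independent failures (an idealization): the pool is only
    down when every server is down at the same time.
    """
    if not 0.0 <= server_availability <= 1.0:
        raise ValueError("server_availability must be between 0 and 1")
    if server_count < 1:
        raise ValueError("server_count must be at least 1")
    chance_all_down = (1.0 - server_availability) ** server_count
    return 1.0 - chance_all_down


# Hypothetical figure: each server is up 99% of the time.
for n in (1, 2, 3):
    print(n, "server(s):", round(availability_of_pool(0.99, n), 6))
```

Under these assumptions, each extra server shrinks the chance of a full outage by another factor of 100. Correlated failures, such as a bad deploy or a shared network outage, would make the real gain smaller.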

Although horizontal scaling introduces added complexity in managing multiple servers, it remains the preferred approach for high-traffic applications due to its resilience and flexibility.

The Role of a Load Balancer

To effectively implement horizontal scaling, we need a load balancer to distribute traffic across multiple servers.

But what exactly is this load balancer and how does it work?

How a Load Balancer Works

A load balancer sits between users and servers, ensuring requests are handled efficiently. Here’s how it works:

Traffic Distribution: It receives user requests and spreads them across the available servers according to a balancing algorithm, such as round robin.

Fault Tolerance: The load balancer monitors server health. If one server fails, it stops sending traffic there and routes requests to the healthy servers, which helps prevent downtime.

Scalability: When traffic increases, new servers can be added to the pool, and the load balancer starts directing requests to them.
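The three behaviors above can be sketched with a toy, in-memory balancer. This is an illustration under assumptions, not how any particular product works: it uses round robin as the distribution strategy, and the class name, method names, and server names (`app-1` and so on) are all hypothetical.

```python
from itertools import count


class RoundRobinBalancer:
    """Toy balancer: rotates over the servers currently marked healthy."""

    def __init__(self, servers):
        self.servers = list(servers)       # registration order
        self.healthy = set(self.servers)   # servers eligible for traffic
        self._ticket = count()             # increases by one per request

    def add_server(self, server):
        """Scalability: a new server starts receiving traffic right away."""
        self.servers.append(server)
        self.healthy.add(server)

    def mark_down(self, server):
        """Fault tolerance: a failed server is skipped from now on."""
        self.healthy.discard(server)

    def route(self):
        """Traffic distribution: pick the next healthy server in rotation."""
        candidates = [s for s in self.servers if s in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy servers available")
        return candidates[next(self._ticket) % len(candidates)]


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
first_pass = [lb.route() for _ in range(3)]       # each server gets one request
lb.mark_down("app-2")
after_failure = [lb.route() for _ in range(2)]    # app-2 is never chosen
lb.add_server("app-4")
after_scale_out = [lb.route() for _ in range(3)]  # app-4 joins the rotation
print(first_pass, after_failure, after_scale_out)
```

Real load balancers usually detect failures through health checks rather than an explicit `mark_down` call, and many support other strategies besides round robin.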

Example Scenarios

To better understand how horizontal scaling and load balancers work in real-world scenarios, consider the following cases:

Scenario 1: Server Failure

If one server unexpectedly crashes, the load balancer detects the failure and routes traffic to the remaining servers, keeping the application online with little or no disruption for users.
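One common way to detect a failure is to probe each server periodically and remove it from rotation after several consecutive failed probes. The sketch below assumes that approach. The `HealthTracker` class, the threshold of 3, and the fake probe are hypothetical stand-ins, since a real probe would typically be an HTTP or TCP check.

```python
class HealthTracker:
    """Counts consecutive failed probes per server."""

    def __init__(self, servers, failure_threshold=3):
        self.failure_threshold = failure_threshold
        self.failures = {server: 0 for server in servers}

    def record(self, server, probe_ok):
        # A successful probe resets the streak; a failed one extends it.
        if probe_ok:
            self.failures[server] = 0
        else:
            self.failures[server] += 1

    def healthy_servers(self):
        # A server stays in rotation until it reaches the threshold.
        return [s for s, n in self.failures.items()
                if n < self.failure_threshold]


def run_check_round(tracker, probe):
    """Probe every server once and record the result."""
    for server in list(tracker.failures):
        tracker.record(server, probe(server))


def fake_probe(server):
    # Stand-in for a real health check: pretend app-2 has crashed.
    return server != "app-2"


tracker = HealthTracker(["app-1", "app-2", "app-3"])
for _ in range(3):
    run_check_round(tracker, fake_probe)
print(tracker.healthy_servers())
```

Requiring several failures in a row avoids removing a server because of one slow response, at the cost of sending it traffic for a few more seconds after it actually goes down.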

Scenario 2: Traffic Surge

During peak hours or unexpected traffic spikes, additional servers can be added dynamically. The load balancer then distributes traffic among them as well, which helps prevent slowdowns or crashes.
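How many servers to add during a surge can be estimated with simple arithmetic. The sketch below assumes you know roughly how many requests per second one server can handle and want to keep each server below a target utilization. All names and numbers are hypothetical, and real autoscalers typically use richer signals such as CPU usage or queue length.

```python
import math


def servers_needed(requests_per_second, capacity_per_server,
                   target_utilization=0.7):
    """Estimate the pool size that keeps each server under the target load."""
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable_capacity = capacity_per_server * target_utilization
    # Always keep at least one server, even with no traffic.
    return max(1, math.ceil(requests_per_second / usable_capacity))


# Hypothetical numbers: one server handles about 500 requests per second.
normal_load = servers_needed(1200, 500)
surge_load = servers_needed(4500, 500)
print("normal:", normal_load, "surge:", surge_load)
print("servers to add:", surge_load - normal_load)
```

Leaving headroom through `target_utilization` gives the pool time to absorb a spike while new servers are still starting up.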

Key Takeaways

Vertical scaling enhances a single server’s capacity but has physical and cost limitations.

Horizontal scaling adds more servers to share the load, making it the preferred choice for high-traffic and fault-tolerant applications.

Load balancers ensure smooth traffic distribution, redundancy, and scalability in horizontally scaled architectures.

While horizontal scaling is often the go-to for large applications, vertical scaling can still be a good option for smaller systems or when you need a quick solution without the complexity of managing multiple servers.

Check out this article next to learn more about load balancing strategies and algorithms.
